Why AWS DMS fails at Replication and Change Data Capture

Database Migration Service - not Replication

AWS DMS is a common choice for database replication on AWS - until it isn’t. What starts as a "quick setup" turns into weeks of debugging, task restarts, and missed data. In this post, we break down the technical reasons why AWS DMS fails at reliable CDC replication, and how Popsink solves these problems by design. No fluff - just facts, examples, and comparisons that matter to data engineers.

1. Designed for migration, not continuous replication

When AWS Database Migration Service (DMS) was launched in 2016, its primary purpose was clear: to help enterprises move their database workloads to the cloud. It was a response to the growing demand for lift-and-shift strategies during the early days of cloud adoption, when migrating Oracle, SQL Server, or MySQL databases to AWS RDS or Aurora was the core use case. DMS was optimized for full initial loads followed by basic CDC replication to keep up - not for long-running, real-time replication pipelines.

That legacy shows. Today, many data teams try to use DMS for always-on replication, only to face broken expectations: dropped events, lag during bursts, and inconsistent recovery behaviors when things go wrong.

There’s a reason it’s called the Database Migration Service, not the Database Replication Service. Its architecture reflects a tool built to move data once, not to keep it synced forever.

2. Performance bottlenecks with high-volume CDC

CDC replication at scale is fundamentally different from basic migration. It requires sustained throughput, low latency, and consistent handling of spikes in change volume, all while preserving transactional order and correctness. AWS DMS was not designed to handle this gracefully.

DMS tasks are single-threaded by default and operate with limited internal parallelism. This means that under high-change-rate workloads (for example, when thousands of rows change per minute), DMS can fall behind quickly, resulting in replication lag that compounds over time. Moreover, there is no automatic scaling: users must manually size replication instances and shard tasks by table, schema, or key range to increase throughput.
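To make "lag that compounds" concrete, here is a back-of-the-envelope sketch. The rates are made-up numbers for illustration, not measured DMS figures, and the function names are ours: whenever changes arrive faster than a task can apply them, the backlog grows linearly for as long as the burst lasts, and it only drains at the difference between the apply rate and the incoming rate afterwards.

```python
def backlog_after(seconds: float, change_rate: float, apply_rate: float,
                  initial_backlog: float = 0.0) -> float:
    """Pending changes after `seconds` at the given rates (never below zero)."""
    return max(0.0, initial_backlog + (change_rate - apply_rate) * seconds)


def catch_up_seconds(backlog: float, change_rate: float, apply_rate: float) -> float:
    """Time to drain `backlog` while new changes keep arriving at `change_rate`."""
    if apply_rate <= change_rate:
        return float("inf")  # the task never catches up
    return backlog / (apply_rate - change_rate)


# Hypothetical burst: 5,000 changes/s arrive for 10 minutes,
# while the apply side sustains 3,000 changes/s.
burst_backlog = backlog_after(600, change_rate=5_000, apply_rate=3_000)
print(burst_backlog)  # 1200000.0 pending changes

# After the burst, traffic drops to 2,500 changes/s.
print(catch_up_seconds(burst_backlog, change_rate=2_500, apply_rate=3_000))  # 2400.0
```

With these assumed numbers, a 10-minute burst takes 40 minutes to absorb, and a second burst inside that window starts from a non-zero backlog.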

Adding to the complexity, DMS relies on intermediate caches and buffers stored on the replication instance. If those buffers overflow, as can happen when the target slows down, DMS may throttle, drop events, or get stuck, which requires recreating the task.

From a technical perspective, the legacy of DMS shows in both initial load and ongoing replication modes:

Initial Load Weaknesses

- No support for consistent snapshotting across multiple tables - joins and referential integrity can break.

- Target schemas must be manually aligned unless using AWS SCT (which is limited).

- Table reloads are destructive - DMS drops and re-creates target data unless specially configured.

CDC Replication Weaknesses

- Changes are applied row-by-row, not transactionally - no guarantee of atomicity across related updates.

- Only single-threaded apply loop per task; limited throughput and high latency under write-heavy loads.

- Schema changes midstream (like ALTER TABLE ADD COLUMN) may cause DMS to ignore new columns or fail silently unless tasks are stopped and reloaded.
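The atomicity point above is easiest to see with a small, tool-agnostic sketch. It uses an in-memory SQLite database as a stand-in target and a hypothetical event shape (`txid`, `account`, `delta`); it does not reproduce DMS internals, it only contrasts committing each change on its own with committing all changes of one source transaction together.

```python
import sqlite3

# Two change events from ONE source transaction (a transfer between accounts).
# The event shape is hypothetical; real CDC payloads differ per tool.
events = [
    {"txid": 42, "account": "a", "delta": -100},
    {"txid": 42, "account": "b", "delta": 100},
]

UPDATE = "UPDATE accounts SET balance = balance + ? WHERE id = ?"


def make_target() -> sqlite3.Connection:
    # isolation_level=None puts sqlite3 in autocommit mode, so we control transactions.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER)")
    conn.execute("INSERT INTO accounts VALUES ('a', 500), ('b', 500)")
    return conn


def total(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT SUM(balance) FROM accounts").fetchone()[0]


def apply_row_by_row(conn, events):
    """Each event commits on its own; returns the total visible after each commit."""
    seen = []
    for e in events:
        conn.execute(UPDATE, (e["delta"], e["account"]))
        seen.append(total(conn))
    return seen


def apply_transactionally(conn, events):
    """All events of a source transaction commit together, or none do."""
    conn.execute("BEGIN")
    try:
        for e in events:
            conn.execute(UPDATE, (e["delta"], e["account"]))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return [total(conn)]


print(apply_row_by_row(make_target(), events))       # [900, 1000]
print(apply_transactionally(make_target(), events))  # [1000]
```

In the row-by-row case, any query that runs between the two commits sees a total of 900: money has left one account and not yet arrived in the other. A failure at that point leaves the target in that state until someone repairs it.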

As a result, teams often start with DMS for simplicity - and end up maintaining fragile, low-throughput pipelines that require constant reloading, monitoring, and manual repair.

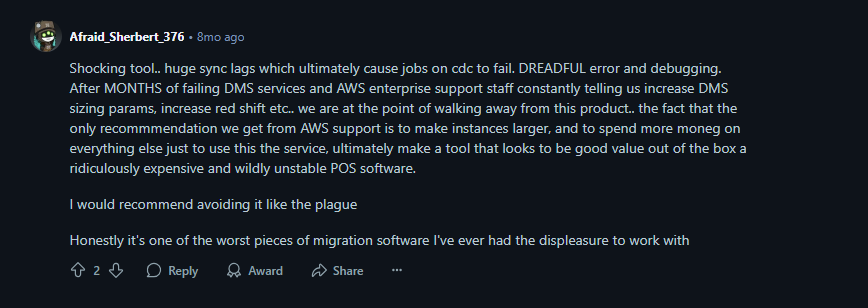

Tl;dr: “Sometimes works, sometimes doesn’t” - warclaw133 on Reddit

3. High human management overhead

One of the most frustrating aspects of running AWS DMS in production isn’t just what it fails to do - it’s how much of it you have to do yourself.

With AWS DMS, when something breaks, the most reliable fix is often to reload the entire task from scratch. That might be fine if you’re syncing a handful of lookup tables. But for business-critical, high-volume workflows, reloading isn’t just a hassle: it’s a serious risk to data integrity and team bandwidth.

Despite the promise of a managed service, DMS tasks often become a patchwork of manual configurations, fragile mappings, and constant babysitting. Need to handle schema changes? You’ll likely have to stop the task, edit the JSON mapping manually, rebuild the target schema, and then relaunch everything from scratch with no schema versioning or validation support in place - hoping that no rows are missed or duplicated in the process.

Scaling replication to meet higher volumes? You’re in for more work. Because each DMS task is essentially single-threaded, horizontal scaling requires manual sharding: creating multiple tasks, sizing and allocating replication instances per workload, and configuring filters for each table or key range. Every new task becomes a new point of failure and a new maintenance job. Each task then needs its own monitoring, error handling, and rollback strategy, turning your replication layer into a brittle, bespoke ecosystem.
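As a rough illustration of what that manual sharding involves, the sketch below generates one table-mapping document per key range, using the selection-rule and source-filter structure of DMS table mappings as we understand it. The schema, table, column, and key bounds are hypothetical, and the helper name is ours; check the rule format against the current AWS documentation before relying on it.

```python
import json


def key_range_mappings(schema: str, table: str, column: str,
                       lower: int, upper: int, shards: int) -> list[dict]:
    """Return one table-mapping document per shard, splitting [lower, upper] evenly."""
    span = upper - lower + 1
    step = -(-span // shards)  # ceiling division
    mappings = []
    for i in range(shards):
        start = lower + i * step
        if start > upper:
            break
        end = min(start + step - 1, upper)
        mappings.append({
            "rules": [{
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": f"{table}-shard-{i}",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
                "filters": [{
                    "filter-type": "source",
                    "column-name": column,
                    "filter-conditions": [{
                        "filter-operator": "between",
                        "start-value": str(start),
                        "end-value": str(end),
                    }],
                }],
            }]
        })
    return mappings


# Hypothetical example: split public.orders into 4 tasks by id.
for mapping in key_range_mappings("public", "orders", "id", 1, 1_000_000, 4):
    condition = mapping["rules"][0]["filters"][0]["filter-conditions"][0]
    print(condition["start-value"], condition["end-value"])

# Each document would then be serialized with json.dumps(...) and supplied
# as the table mappings of its own replication task.
```

Note what the sketch leaves out: every shard still needs its own task, endpoints, and alarms, and a fixed upper bound silently excludes keys created after the ranges were chosen, so the ranges themselves become something to maintain.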

And if you’re dealing with LOB columns (large JSON objects, long text fields, email bodies...), DMS may need to run in Full LOB mode, issuing an extra read query per change event. That’s not just inefficient, it’s dangerous. Under pressure, those lookups can trigger source-side locks, slow down the replication task, and cause it to silently hang with no actionable diagnostics.

All of this translates to real-world operational debt: custom scripts to restart tasks, manual reconciliation for missed events, duplicated alert logic, and an ever-growing matrix of task-level failure points.

Task and Pipeline Management

- Most operations require manual updates, restarting tasks, and full table reloads.

- Scaling throughput involves manually creating and managing multiple replication tasks, each with its own filters, endpoints, and monitoring setup.

- Task failures are often non-recoverable without restart, even for transient issues like timeouts or target-side slowdowns.

- Replicating tables with LOB (Large Object) columns often requires Full LOB mode, which introduces per-event source lookups that degrade performance and, depending on the source database, can cause locking or contention.

- Choosing between Full, Limited, or Inline LOB modes requires manual tuning, often with trade-offs in performance vs. completeness.
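The LOB trade-off above comes down to a handful of task settings. The sketch below builds the `TargetMetadata` block for each mode, based on our reading of the documented setting names (`SupportLobs`, `FullLobMode`, `LimitedSizeLobMode`, `LobMaxSize`, `LobChunkSize`, `InlineLobMaxSize`). The helper and its default sizes are hypothetical; treat the output as a starting point to verify against the current DMS task-settings reference, not as recommended values.

```python
import json

VALID_MODES = {"full", "limited", "inline"}


def lob_target_metadata(mode: str, lob_max_kb: int = 32,
                        inline_max_kb: int = 0, chunk_kb: int = 64) -> dict:
    """Build a TargetMetadata block for one LOB mode (sizes in KB)."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown LOB mode: {mode!r}")

    settings = {"SupportLobs": True, "LobChunkSize": chunk_kb}
    if mode == "limited":
        # Fast, but LOBs larger than LobMaxSize are truncated.
        settings.update(FullLobMode=False, LimitedSizeLobMode=True,
                        LobMaxSize=lob_max_kb)
    else:
        # Complete, but large LOBs are fetched with extra source lookups.
        settings.update(FullLobMode=True, LimitedSizeLobMode=False)
        if mode == "inline":
            if inline_max_kb <= 0:
                raise ValueError("inline mode needs a positive inline_max_kb")
            # Assumption: inline transfer is layered on top of Full LOB mode.
            settings["InlineLobMaxSize"] = inline_max_kb
    return {"TargetMetadata": settings}


print(json.dumps(lob_target_metadata("limited", lob_max_kb=64), indent=2))
```

Whichever mode is chosen, the size limits have to be picked by someone who knows the largest value each LOB column can hold, which is exactly the kind of per-table tuning the list above describes.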

In short: DMS doesn’t reduce operational load - it shifts it onto your team, forcing them to become part-time SREs for fragile data pipelines. In contrast, Popsink automates schema tracking, scales natively with throughput, and treats LOBs and high-volume CDC events as first-class citizens, so you can focus on data, not damage control.

4. Poor schema evolution handling

Modern applications evolve fast and so do their schemas. Columns get added, renamed, or dropped; data types change; new tables are introduced regularly. A production-grade replication system must adapt dynamically to these changes without breaking.

Unfortunately, AWS DMS was never built with robust schema evolution in mind. While it offers basic support for adding columns, it does not automatically propagate all changes to the target. More complex operations - like dropping columns, altering data types, or adding nested structures - require manual intervention, and may even cause the replication task to fail silently or halt entirely.

There’s no concept of schema versioning, contract tracking, or graceful transformation. The burden falls on the data engineer to monitor for changes, manually adjust mappings, and often restart tasks from scratch.

Schema Change Handling Limitations

- Only basic changes like ADD COLUMN are sometimes supported, and even then only if the task is configured to allow it.

- Type changes, column drops, or reordering are not supported midstream and require task re-creation or full table reloads.

- New columns are not automatically added to the target table unless DDL handling is enabled in the task settings (the ChangeProcessingDdlHandlingPolicy block) and both endpoints support DDL replication.

- If a column is added at the source and DMS isn’t reconfigured, it may ignore the column entirely, leading to incomplete or inconsistent target tables.

- In some cases, tasks continue running "successfully" while silently dropping new fields with no alerts or validation failures.

- DMS does not maintain schema history or version tracking, making it hard to diagnose drift or retroactively recover from issues.
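Because nothing in the pipeline flags this kind of drift, teams typically end up writing their own check. A minimal sketch of such a check follows; it assumes you can already fetch a column-name-to-type mapping for each side (for example from `information_schema.columns`), and the `Drift` class and `detect_drift` function are hypothetical names, not part of any AWS API.

```python
from dataclasses import dataclass


@dataclass
class Drift:
    missing_on_target: list[str]
    extra_on_target: list[str]
    type_mismatches: dict[str, tuple[str, str]]

    @property
    def clean(self) -> bool:
        return not (self.missing_on_target or self.extra_on_target
                    or self.type_mismatches)


def detect_drift(source: dict[str, str], target: dict[str, str]) -> Drift:
    """Compare column -> type mappings of a source table and its replica."""
    missing = sorted(source.keys() - target.keys())
    extra = sorted(target.keys() - source.keys())
    mismatched = {
        col: (source[col], target[col])
        for col in sorted(source.keys() & target.keys())
        if source[col] != target[col]
    }
    return Drift(missing, extra, mismatched)


# Hypothetical snapshot: a column was added and a type widened at the source.
source_cols = {"id": "bigint", "email": "text", "created_at": "timestamp"}
target_cols = {"id": "integer", "email": "text"}

drift = detect_drift(source_cols, target_cols)
print(drift.missing_on_target)  # ['created_at']
print(drift.type_mismatches)    # {'id': ('bigint', 'integer')}
print(drift.clean)              # False
```

A real comparison would also need to normalize type names across engines, since the same logical type is rarely spelled the same way on both sides.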

5. Limited error recovery and observability

In production-grade replication, things go wrong. Network blips, permission errors, schema mismatches, and bursty loads are inevitable, and a good replication tool should handle them gracefully, recover automatically, and tell you exactly what happened when things break.

AWS DMS, however, provides only basic error handling mechanisms and limited observability. If a write fails at the destination, the default behavior may be to log the error and move on, retry once, or in some cases silently discard the operation depending on task settings. Worse, the service can hit an internal error threshold and stop the task altogether, requiring a manual restart - with no guarantee that skipped events will be reprocessed.

There’s no built-in dead letter queue, no stateful retry queue, and no way to re-ingest failed events unless you restart the entire task or write external tooling to monitor log gaps.

Monitoring and Debugging

- Error detection is reactive: it requires manual log inspection in CloudWatch, with no per-event traceability or built-in alerting.

- Failures in target write operations (e.g., type mismatches, permission issues) may not halt the task - instead, errors are logged silently, leading to undetected data gaps.

- No support for dead-letter queues, retries with state, or automated backfill - all of which must be implemented externally.
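For readers unfamiliar with the pattern, this is roughly what "retries with a dead-letter queue" means in practice. The sketch is generic and deliberately small: the event shape, the `apply` callback, and the in-memory list standing in for a durable queue are all assumptions made for illustration, and it does not describe how any particular product implements the idea.

```python
from typing import Any, Callable


def apply_with_dlq(events: list[dict[str, Any]],
                   apply: Callable[[dict[str, Any]], None],
                   max_attempts: int = 3) -> tuple[int, list[dict[str, Any]]]:
    """Apply events in order; park events that keep failing instead of dropping them."""
    applied = 0
    dead_letters = []
    for event in events:
        last_error = None
        for _attempt in range(max_attempts):
            try:
                apply(event)
                applied += 1
                break
            except Exception as exc:  # a real pipeline would separate transient from permanent errors
                last_error = exc
        else:
            # Every attempt failed: keep the event and the reason for later replay.
            dead_letters.append({
                "event": event,
                "error": repr(last_error),
                "attempts": max_attempts,
            })
    return applied, dead_letters


def strict_apply(event: dict[str, Any]) -> None:
    """Stand-in for a target write that rejects badly typed values."""
    if not isinstance(event["amount"], int):
        raise TypeError(f"amount must be int, got {type(event['amount']).__name__}")


events = [{"id": 1, "amount": 10}, {"id": 2, "amount": "ten"}, {"id": 3, "amount": 30}]
applied, dead = apply_with_dlq(events, strict_apply)
print(applied)                 # 2
print(dead[0]["event"]["id"])  # 2
```

The important property is that the failed event is neither lost nor allowed to stop everything behind it: it is stored with its error so it can be fixed and re-ingested. A production version would add backoff between attempts and decide whether later events for the same key must wait.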

6. Restricted to the AWS ecosystem

One of the most limiting aspects of AWS DMS is that it's deeply embedded within the AWS ecosystem. While it supports various database engines as sources and targets, the service assumes you’re replicating to or within AWS, making it cumbersome for hybrid or multi-cloud architectures.

DMS requires that all endpoints - whether on-premises or in other clouds - be accessible via AWS VPCs, Direct Connect, or VPN tunnels. Replicating to destinations like Google BigQuery, Azure SQL, or Snowflake hosted outside AWS often involves complex networking workarounds or intermediate storage layers (e.g., landing data in S3 and piping it manually from there). There’s no native support for event-driven APIs, Kafka endpoints, or other common real-time consumers outside of AWS services.

Monitoring and control are also tightly coupled with AWS-native tooling like CloudWatch, IAM, and the DMS Console - all of which assume you're managing infrastructure entirely within AWS.

AWS DMS vs. Popsink for CDC replication

Choosing a replication solution isn’t just about ticking feature boxes - it’s about minimizing operational risk and enabling future-proof data flows. The table below summarizes key differences between AWS DMS and Popsink from a technical and operational standpoint.

AWS DMS is suitable for short-lived migrations or low-volume change streams within the AWS ecosystem, but it shows clear limitations for real-time, scalable, and reliable CDC replication. Popsink was built for continuous data delivery, schema agility, and automated, low-maintenance operations - making it a better fit for modern data teams with production-grade needs.

Conclusion: choose the right tool for the right job

AWS DMS has served a valuable purpose - and still does - for basic lift-and-shift database migrations, particularly within the AWS ecosystem. But as data needs evolve toward always-on, multi-cloud, real-time data architectures, its limitations become increasingly painful for data engineering teams.

- It wasn’t built for continuous replication.

- It doesn’t handle schema evolution reliably.

- It lacks robust error recovery, and it falters under pressure.

In short: DMS is a migrator - not a replicator.

If you’re replicating critical business data, powering real-time analytics, syncing operational systems, or delivering CDC to external consumers - you need something that was built from the ground up for reliability, flexibility, and scale.

That’s where Popsink comes in: a platform that delivers real-time, fault-tolerant replication, adapts to schema changes, runs anywhere, and provides enterprise-grade visibility - without the duct tape.